How cognitive biases influence email A/B tests

We think of cognitive biases as psychological shortcuts people use to process information and make decisions. You probably use these devices (e.g., loss aversion, the fear of missing out and social proof) in your email copy and design to persuade customers to engage and convert.

These are positive uses of cognitive bias to increase the chance that customers will do what you want. However, cognitive bias can work against you if it creeps into your email testing program and skews test construction and results.

Learning to recognize and avoid these biases will help you run more reliable, data-driven testing and generate insights you can use to create more engaging and effective email messages and a stronger program overall.

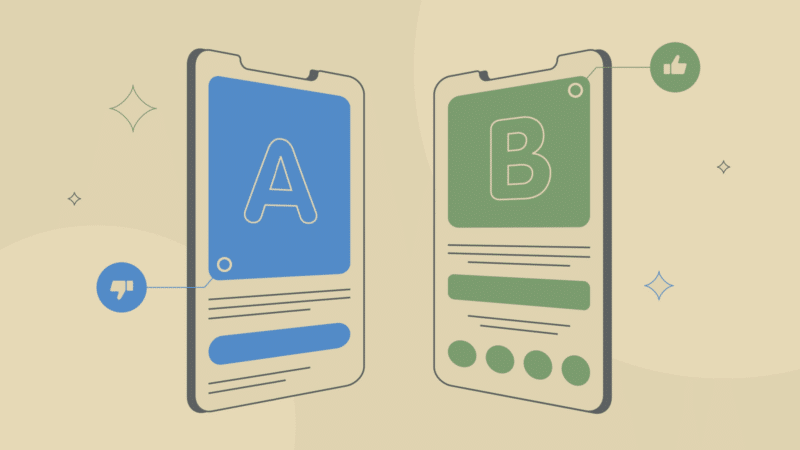

How bias affects A/B testing

A/B testing is a key part of your email marketing program. It can help you understand customer behavior and avoid wasting resources on ineffective initiatives.

However, it’s not foolproof, nor is the data it generates. Although I believe in data-driven decision-making, bias can creep in through hypothesis construction, audience selection, timeline choices or data interpretation.

We don’t intentionally create biased tests, but cognitive biases operate subconsciously. We’re all human, and nobody’s perfect.

4 common cognitive biases that can affect A/B testing

These biases might sound familiar if you’ve read my previous MarTech articles:

- “How cognitive biases shape email engagement.”

- “The perfect combination: GenAI and persuasion strategies for unbeatable A/B tests.”

Here, you’ll see how these biases can misdirect your testing efforts. I’ll introduce each one, explain the problem and show how to recognize and address it for unbiased testing.

The first two biases are often the hardest to overcome because we may not notice them in our own thinking or in the testing tools built into our email platforms.

1. Confirmation bias

Confirmation bias can be one of the trickiest to recognize and correct. It involves long-held beliefs and our unwillingness to admit we’re wrong.

Here’s a useful definition of confirmation bias in testing:

“Confirmation bias is a person’s tendency to favor information that confirms their assumptions, preconceptions or hypotheses whether these are actually and independently true or not.”

Example hypothesis

- “Personalized subject lines will always outperform generic subject lines in open rates because previous campaigns showed a lift in engagement when using personalization.”

This is biased because it doesn’t allow for the possibility that personalization might not be the driving factor behind increased engagement or that different audience segments might respond differently.

How to avoid it

Frame tests neutrally, focusing on discovery:

- “Using personalized subject lines (e.g., ‘John, Here’s Your Exclusive Offer!’) versus non-personalized subject lines (e.g., ‘Exclusive Offer Inside!’) will affect open rates, but we will also analyze click-through rates to determine if personalization influences deeper engagement.”

This is unbiased because it doesn’t presume personalization alone is the driving force behind higher engagement and expands the testing scope to include other factors.
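Here is a minimal Python sketch of what this neutral framing looks like when you analyze results. It assumes you can export delivered, opened and clicked counts per variant from your ESP. `VariantResult` and `summarize` are hypothetical names, not part of any platform. The idea is to report every metric side by side so that an open-rate win doesn’t automatically become the verdict.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    """Counts exported per variant (hypothetical structure)."""
    name: str
    delivered: int
    opens: int
    clicks: int

    @property
    def open_rate(self) -> float:
        return self.opens / self.delivered if self.delivered else 0.0

    @property
    def click_rate(self) -> float:
        # Click-through rate measured against delivered emails
        return self.clicks / self.delivered if self.delivered else 0.0

    @property
    def click_to_open_rate(self) -> float:
        # Share of openers who went on to click (deeper engagement)
        return self.clicks / self.opens if self.opens else 0.0


def summarize(results):
    """Return every metric for every variant so no single metric decides the test."""
    return {
        r.name: {
            "open_rate": round(r.open_rate, 4),
            "click_rate": round(r.click_rate, 4),
            "click_to_open_rate": round(r.click_to_open_rate, 4),
        }
        for r in results
    }
```

With made-up counts such as 5,000 delivered, 1,100 opens and 120 clicks for the personalized line versus 5,000 delivered, 1,000 opens and 130 clicks for the generic one, personalization wins on opens (22% vs. 20%) but loses on clicks (2.4% vs. 2.6%). A hypothesis about open rates alone would hide that split.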

2. Primacy bias

This bias occurs when early test results influence decision-making — like calling an election with only 10% of the votes counted. Unlike confirmation bias, which stems from personal beliefs, primacy is reinforced by testing tools, as I’ll explain later.

Biased example

- “The winning variation of an A/B test can be determined within the first 4 hours because the highest open rates always happen immediately after send.”

How to avoid it

Set a minimum test duration based on how long it typically takes for most of your conversions to come in. Other factors can also come into play, including where your customers live, especially if they span time zones or national borders. Tests should run longer for larger lists, for offers or products that require more consideration time and for more diverse customer bases:

- “Measuring results after 7 days will provide a more reliable view of A/B test performance than declaring a winner within the first 24 hours because delayed engagement trends may affect the outcome.”

This approach ensures that your interpretation of the results will include the broadest range of responses.
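One way to turn "how long it typically takes for most conversions to come in" into a number is to look at how long past conversions took to arrive. The Python sketch below assumes you can export, for previous campaigns, the delay in hours between send and each conversion. The `minimum_test_days` helper and its 95% coverage default are illustrative assumptions, not an industry standard.

```python
import math


def minimum_test_days(conversion_delays_hours, coverage=0.95):
    """Return the smallest whole number of days after send by which
    `coverage` (a share between 0 and 1) of historical conversions arrived.

    Assumption: `conversion_delays_hours` holds send-to-conversion delays
    exported from past campaigns; coverage=0.95 is an illustrative default.
    """
    if not conversion_delays_hours:
        raise ValueError("need at least one historical conversion")
    if not 0 < coverage <= 1:
        raise ValueError("coverage must be in (0, 1]")
    delays = sorted(conversion_delays_hours)
    # Position of the conversion that brings the cumulative share up to `coverage`
    k = max(math.ceil(coverage * len(delays)) - 1, 0)
    # Round up to whole days and never recommend less than one day
    return max(math.ceil(delays[k] / 24), 1)
```

If your conversions trickle in over several days, the result lands well past the first few hours after send, which is exactly the window an early winner call would ignore.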

ESP-based testing tools often reinforce primacy bias through the “10-10-80” method. If your platform includes a testing feature, it likely follows this approach or a variation of it:

- 10% of your audience receives the control message.

- Another 10% gets the variant.

- The platform automatically selects a winner and sends it to the remaining subscribers within hours.

However, the 10-10-80 method relies on sending the winner within 2–24 hours. Thus, you’re making decisions based on potentially inaccurate results, especially if most of your conversions don’t fully come in until seven days after the campaign was sent. A 50-50 split, in which each version goes to half your audience and you measure results over the full response window, is likely to be more accurate.
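To make the difference between the two designs concrete, here is a minimal Python sketch of random audience assignment. `split_audience` is a hypothetical helper, not an ESP feature. With shares of (0.1, 0.1, 0.8), the third group stands in for the 80% who later receive the winner; with (0.5, 0.5), each version goes to half the list.

```python
import random


def split_audience(subscribers, shares, seed=42):
    """Randomly assign subscribers to groups sized by `shares`.

    shares: e.g. (0.1, 0.1, 0.8) for control / variant / winner-later,
    or (0.5, 0.5) for a full 50-50 test. Hypothetical helper.
    """
    if abs(sum(shares) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1")
    pool = list(subscribers)
    # Random shuffle so list order (signup date, domain, etc.) can't bias groups
    random.Random(seed).shuffle(pool)
    groups, start = [], 0
    for i, share in enumerate(shares):
        # The last group takes any rounding remainder so nobody is dropped
        if i == len(shares) - 1:
            end = len(pool)
        else:
            end = start + round(share * len(pool))
        groups.append(pool[start:end])
        start = end
    return groups
```

On a 1,000-subscriber list, the 10-10-80 design leaves 100 recipients per test cell, while the 50-50 design gives each version 500. That is five times as much data per version, and the comparison can run for the full response window because nobody is waiting on a winner to be picked.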

Dig deeper: Why you should track your email’s long tail to measure success (plus a case study)

3. Status quo or familiarity bias

These biases lead us to stick with what we know rather than explore new possibilities.

Status quo bias keeps things unchanged, even when a change could improve engagement or conversions. In testing, this often means focusing on minor tweaks — like button color or subject lines — rather than experiments that could reshape our understanding of customers.

Familiarity bias, or fear of the unknown, makes us favor what feels reliable and trustworthy, even if less familiar options could be more effective.

Biased example

- “A subject line focusing on emotion for Mother’s Day will generate more clicks than a subject line focusing on saving money because research shows emotion is more effective when associated with events that have strong emotional connections.”

How to avoid it

This test is technically acceptable as far as it goes — which isn’t far enough. It doesn’t do enough to connect the subject line focus to a recipient’s propensity to click. Why not expand your scope to look for deeper reasons?

Testing the potential for sweeping changes in your messaging plan can feel risky, but don’t let this preference for the status quo and familiarity stop you from trying:

- “An email message focusing on emotion for Mother’s Day will generate more clicks than an email message focusing on saving money because research shows emotion is more effective when associated with events that have strong emotional connections.”

A broader test like this is still possible within the scientific A/B testing framework, even though it includes multiple elements like subject line, images, call to action and body copy. That’s because you are still testing one concept (emotion) against another (saving money).

This is a key aspect of my company’s holistic testing methodology. This approach goes beyond simply testing one subject line against another. It uncovers what truly motivates customers to act. The insights gained can reveal valuable information about the audience and inform future initiatives beyond testing.

4. Recency bias

With recency bias, we give recent performance trends more weight than what we learned in previous tests and in different testing environments. The danger is that we might generalize the results across our email marketing program without knowing whether this is appropriate.

Biased example

Consider the Mother’s Day test from the status quo and familiarity bias example above. Even with the fix that expands the focus beyond the subject line, it would be a mistake to assume that emotion always beats more rational appeals, like saving money.

Suppose you ran that test the day before Mother’s Day. You might indeed find that saving money generates more clicks than emotion. But does that mean kids are more cost-conscious than emotionally connected to their mums? Or could anxiety among your procrastinating subscribers be the motivator?

Even if emotion is the big winner in this instance, that doesn’t mean you can apply those findings across your entire email program. Assuming that playing on emotional connections will serve you equally well when you promote a giant back-to-school sale could be a big mistake.

How to avoid it

Run a modified version of this hypothesis, without the holiday-specific emotion factor, several times during your marketing calendar year, and exclude emotion-laden holidays and special events from the testing period.
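A rough way to plan those repeat runs is to spread test dates evenly across the year and push any date that lands inside an excluded window to the day after that window ends. The `schedule_test_dates` helper and the exclusion windows in the test are hypothetical; substitute the holidays and events that matter in your own markets.

```python
from datetime import date, timedelta


def schedule_test_dates(year, runs, excluded_windows):
    """Spread `runs` send dates evenly across `year`, skipping past any
    inclusive (start, end) window that marks an emotion-laden holiday or event.

    Dates pushed past Dec. 31 are dropped rather than spilling into next year.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    start = date(year, 1, 1)
    days_in_year = (date(year + 1, 1, 1) - start).days
    step = days_in_year // runs
    windows = sorted(excluded_windows)  # check windows in calendar order
    dates = []
    for i in range(runs):
        # Aim for the middle of each evenly sized slice of the year
        d = start + timedelta(days=i * step + step // 2)
        for win_start, win_end in windows:
            if win_start <= d <= win_end:
                d = win_end + timedelta(days=1)
        if d.year == year:
            dates.append(d)
    return dates
```

Spreading the runs out this way gives you several non-holiday readings to compare, so one seasonal result is less likely to be mistaken for a program-wide rule.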

Dig deeper: How cognitive biases prevent you from connecting with your audience

How to design bias-resistant A/B tests

Acknowledging that you have these unconscious biases is the first step toward doing everything you can to avoid them in your A/B tests.

Develop a neutral hypothesis based on audience behavior

This will help you avoid problems like confirmation bias and recency bias.

Use a structured testing methodology

A strategy-based testing program that aligns with your email program goals helps you avoid the hazards of on-the-fly testing, including bias. I’ve discussed the basics of holistic testing in previous columns. One advantage is that it’s designed specifically for email.

Set sample sizes, data collection thresholds and test windows

Build these considerations into your testing plan to reduce the chance of introducing primacy and other biases.
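For the sample-size part of the plan, one common approach is the normal-approximation formula for comparing two proportions. It estimates how many recipients each version needs before a given difference in conversion rate can be detected reliably. This Python sketch assumes a two-sided test at significance level `alpha` with the chosen statistical `power`. The baseline and target rates you pass in are your own estimates, and `sample_size_per_variant` is a hypothetical name.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_control, p_variant, alpha=0.05, power=0.8):
    """Recipients needed in EACH group to detect p_control vs. p_variant
    with a two-sided test (normal approximation, unpooled variance)."""
    if p_control == p_variant:
        raise ValueError("the two rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha=0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for power=0.8
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    n = (z_alpha + z_beta) ** 2 * variance / (p_control - p_variant) ** 2
    return math.ceil(n)
```

For example, detecting a lift from a 2% to a 2.5% conversion rate at these defaults works out to roughly 13,800 recipients per version. Smaller expected lifts push that number up quickly, which is worth knowing before a 10% test cell is asked to carry the decision.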

Don’t review results mid-test

You’ll avoid the freak-out factor that often dooms legitimate testing. Maybe you’ve seen this happen: Someone peeks too soon, sees an alarming result and hits the “Stop” button prematurely. Remember what I said about the primacy bias, and don’t let early results lead you to a disastrous mistake.

If you’re testing an email campaign, wait until the test has run its planned course and your results reach statistical significance before drawing conclusions. For email automation tests, run them for the full predetermined period needed to reach statistical significance before making any decisions.
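Here is a minimal Python sketch of "decide once, at the end." The helper refuses to name a winner until the predetermined window has elapsed, then applies a standard pooled two-proportion z-test. The function names, the 7-day window in the test and the 0.05 threshold are illustrative assumptions, and your platform may use a different statistical test.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for the difference between two conversion rates
    (pooled two-proportion z-test, normal approximation)."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (conv_b / n_b - conv_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def evaluate_at_end(conv_a, n_a, conv_b, n_b, days_elapsed, planned_days, alpha=0.05):
    """Refuse to call a winner before the predetermined test window closes."""
    if days_elapsed < planned_days:
        return "keep running"
    p = two_proportion_p_value(conv_a, n_a, conv_b, n_b)
    if p >= alpha:
        return "no significant difference"
    return "B wins" if conv_b / n_b > conv_a / n_a else "A wins"
```

Called on day 3 of a planned 7-day test, it returns "keep running" no matter how dramatic the early numbers look, which is the discipline a premature press of the "Stop" button undermines.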

Dig deeper: How ‘the curse of knowledge’ may be hurting your business and what to do about it

Test with confidence and without bias

Getting started with testing can be intimidating, but it becomes addictive as well-designed tests and statistically significant results provide deeper customer insights and improve email performance.

No matter how carefully you structure your tests, A/B testing isn’t immune to bias. However, recognizing its presence, understanding your biases and following a solid testing structure can minimize its impact and strengthen your confidence in the results.

The post How cognitive biases influence email A/B tests appeared first on MarTech.
